Combining Static and Dynamic Features for Multivariate Sequence Classification

This is a cover story for the following research paper by Anna Leontjeva and Ilya Kuzovkin:

Combining Static and Dynamic Features for Multivariate Sequence Classification

2016 IEEE International Conference on Data Science and Advanced Analytics (DSAA)

In classification tasks, features usually fall into two categories. Dynamic (sequential) features describe each data sample by one or more variables whose values change over time, as in a time series. Static features describe each sample by a set of variables, each with a single fixed value.

For almost any sequential dataset, static features can be extracted from the temporal data. One example is Fourier analysis of EEG signals, which transforms a signal of arbitrary length into a fixed-size frequency-domain representation. In many real-life applications, a dataset already contains instances described by features of both categories. Consider hospital data: the age and gender of a patient are static features, while heartbeat signals recorded by electrodes over a period of time are dynamic features.

Although both static and dynamic features may contribute to classification, they are rarely used together. One reason is that most machine learning methods cannot process static and dynamic data simultaneously. Discriminative algorithms such as Random Forest, Support Vector Machines and feedforward neural networks take fixed-size static feature vectors as input, whereas common methods for sequence classification are variants of Hidden Markov Models, Dynamic Time Warping (DTW) and Recurrent Neural Networks [8].
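As a minimal sketch of the Fourier-based extraction of static features from sequences, the hypothetical helper `fourier_features` below maps a one-dimensional signal of any length to a fixed number of band-power values. The equal-width bands over normalized frequency and the default of eight bands are assumptions for illustration only; EEG pipelines typically use physiologically defined bands (delta, theta, alpha and so on) measured in hertz instead.

```python
import numpy as np

def fourier_features(signal, n_bands=8):
    """Map a 1-D signal of any length to `n_bands` band-power features.

    Hypothetical helper: the one-sided power spectrum is split into equal-width
    bands over normalized frequency [0, 0.5] (cycles per sample), and the mean
    power in each band is returned. Empty bands (very short signals) give 0.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                              # remove the DC offset
    power = np.abs(np.fft.rfft(x)) ** 2           # one-sided power spectrum
    freqs = np.fft.rfftfreq(x.size)               # normalized frequencies (sample spacing 1)
    edges = np.linspace(0.0, 0.5, n_bands + 1)
    # Assign each frequency bin to a band; the Nyquist bin (0.5) joins the last band
    band = np.minimum(np.searchsorted(edges, freqs, side="right") - 1, n_bands - 1)
    features = np.zeros(n_bands)
    for b in range(n_bands):
        in_band = band == b
        if in_band.any():
            features[b] = power[in_band].mean()
    return features
```

Two recordings of different lengths thus yield vectors of the same size, which a static classifier such as Random Forest can consume directly. The price is that the ordering of events within the sequence is largely discarded.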

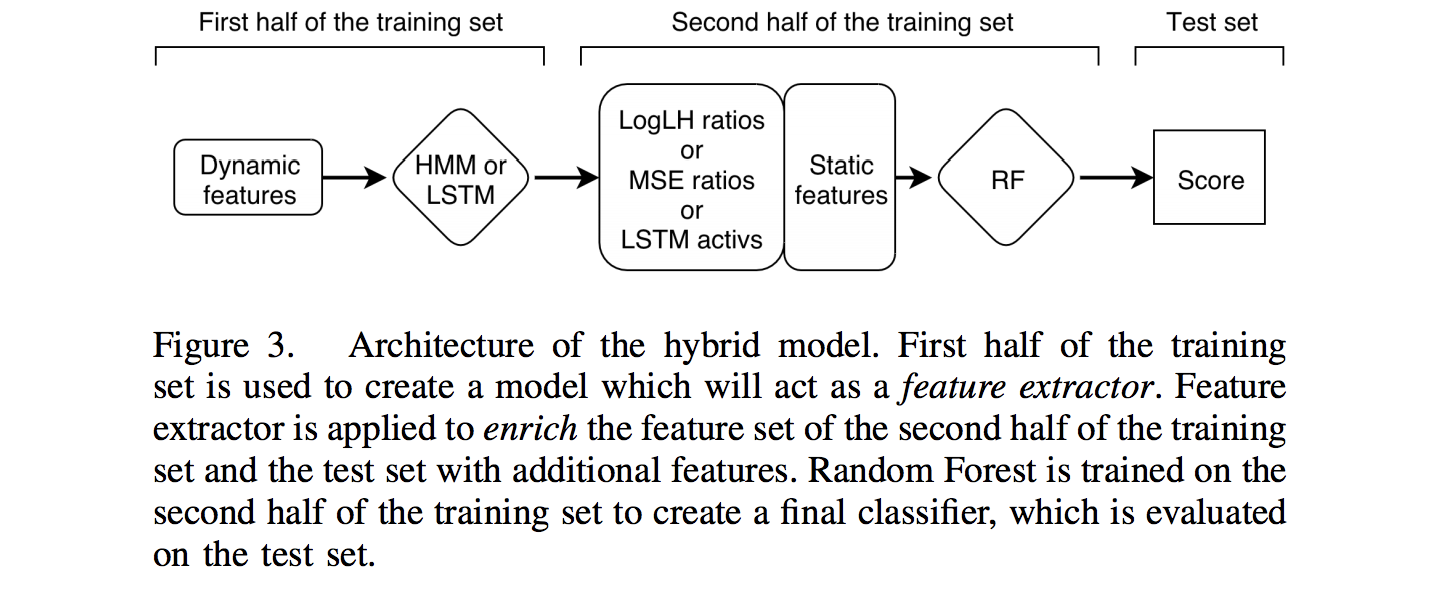

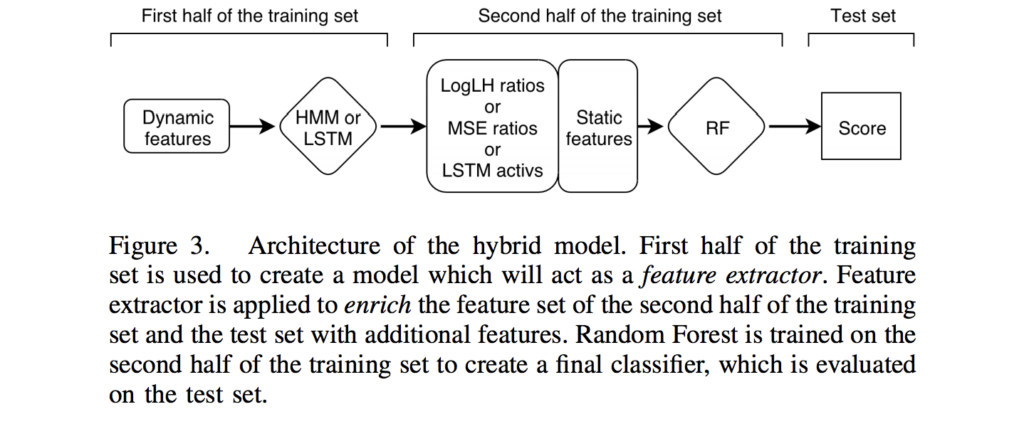

In this paper, we investigate whether there is a better way of extracting useful information from both data modalities to improve overall classification performance. We devise a data augmentation technique in which static features are concatenated with the data representation produced by a dynamic model. We call this approach hybrid and show that this hybrid way of stacking models is generally more beneficial than ensemble methods.

- We find that combining temporal and static information can boost classification performance.

- We compare different ways to combine static and dynamic features and propose a hybrid approach that employs an unconventional way of concatenating features.

- We empirically demonstrate that the hybrid method outperforms ensemble and other baseline methods on several public datasets.

- We perform a controlled experiment on a synthetic dataset to investigate how dataset characteristics affect the performance of the baseline, ensemble and hybrid methods.
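The difference between the hybrid and ensemble strategies can be illustrated in a few lines. This is a minimal sketch under stated assumptions, not the authors' implementation (that lives in the GitHub repository linked below). A per-class Gaussian AR(1) model stands in for the dynamic model (the text names HMMs and recurrent networks as typical choices), a hand-written logistic regression stands in for the static classifier, and all helper names and the toy data generator are hypothetical. The hybrid model appends the dynamic model's per-sequence log-likelihood ratio to the static features and trains one classifier; the ensemble averages the probabilities of a static-only and a dynamic-only classifier.

```python
import numpy as np

# Hypothetical stand-in for the dynamic model: one Gaussian AR(1) model per class,
# x[t] = a * x[t-1] + noise, fitted by least squares on univariate sequences.
def fit_ar1(seqs):
    prev = np.concatenate([s[:-1] for s in seqs])
    curr = np.concatenate([s[1:] for s in seqs])
    a = (prev @ curr) / (prev @ prev)
    var = np.mean((curr - a * prev) ** 2)
    return a, var

def ar1_loglik(seq, a, var):
    # Gaussian log-likelihood of seq[1:] conditioned on seq[:-1]
    resid = seq[1:] - a * seq[:-1]
    return -0.5 * np.sum(np.log(2 * np.pi * var) + resid ** 2 / var)

def dynamic_score(seqs, models):
    # Per-step log-likelihood ratio (class-1 model vs class-0 model): one number per
    # sequence, so sequences of any length get a fixed-size representation
    return np.array([(ar1_loglik(s, *models[1]) - ar1_loglik(s, *models[0])) / (len(s) - 1)
                     for s in seqs])

def fit_logreg(X, y, lr=0.5, steps=2000):
    # Minimal batch-gradient-descent logistic regression; a bias column is appended
    Xb = np.column_stack([X, np.ones(len(X))])
    w = np.zeros(Xb.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * Xb.T @ (p - y) / len(y)
    return w

def predict_proba(w, X):
    Xb = np.column_stack([X, np.ones(len(X))])
    return 1.0 / (1.0 + np.exp(-Xb @ w))

def fit_both(static, seqs, y):
    models = {c: fit_ar1([s for s, label in zip(seqs, y) if label == c]) for c in (0, 1)}
    dyn = dynamic_score(seqs, models)
    hybrid_w = fit_logreg(np.column_stack([static, dyn]), y)  # static + dynamic representation
    static_w = fit_logreg(static, y)                          # ensemble member 1
    dynamic_w = fit_logreg(dyn, y)                            # ensemble member 2
    return models, hybrid_w, static_w, dynamic_w

def predict_both(fitted, static, seqs):
    models, hybrid_w, static_w, dynamic_w = fitted
    dyn = dynamic_score(seqs, models)
    p_hybrid = predict_proba(hybrid_w, np.column_stack([static, dyn]))
    # Ensemble: average the probabilities of the two single-modality classifiers
    p_ensemble = 0.5 * (predict_proba(static_w, static) + predict_proba(dynamic_w, dyn))
    return p_hybrid, p_ensemble

def make_toy_data(n, rng):
    # Hypothetical toy data: the label shifts one static feature and changes the
    # AR(1) coefficient of a variable-length sequence
    y = rng.integers(0, 2, size=n)
    static = rng.normal(loc=y.astype(float), scale=1.0, size=n).reshape(-1, 1)
    seqs = []
    for label in y:
        a = 0.8 if label == 1 else 0.2
        x = np.zeros(rng.integers(30, 80))
        for t in range(1, len(x)):
            x[t] = a * x[t - 1] + rng.normal()
        seqs.append(x)
    return static, seqs, y
```

For example, with `rng = np.random.default_rng(0)`, calling `fit_both(*make_toy_data(400, rng))` and then `predict_both(fitted, static, seqs)` on fresh data returns the hybrid and ensemble probability vectors. One design caveat: here the training-set dynamic scores come from models fitted on that same training set; in a real stacking setup they are better computed out-of-fold so that the second-stage classifier does not learn from overly optimistic scores.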

Publication in IEEE DSAA 2016 proceedings: https://ieeexplore.ieee.org/document/7796887

Preprint on arXiv: https://arxiv.org/abs/1712.08160

Implementation on GitHub: https://github.com/annitrolla/Generative-Models-in-Classification
