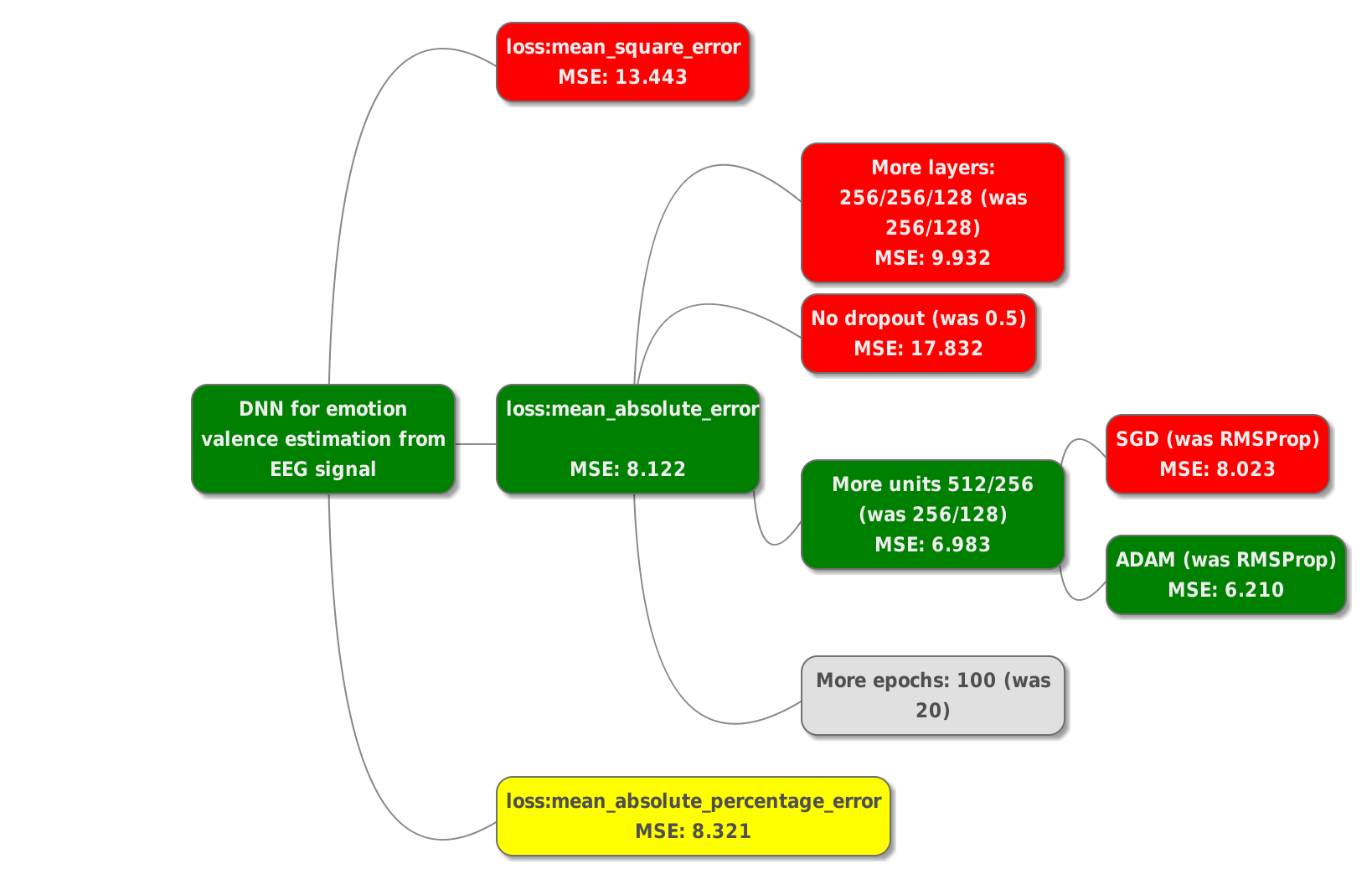

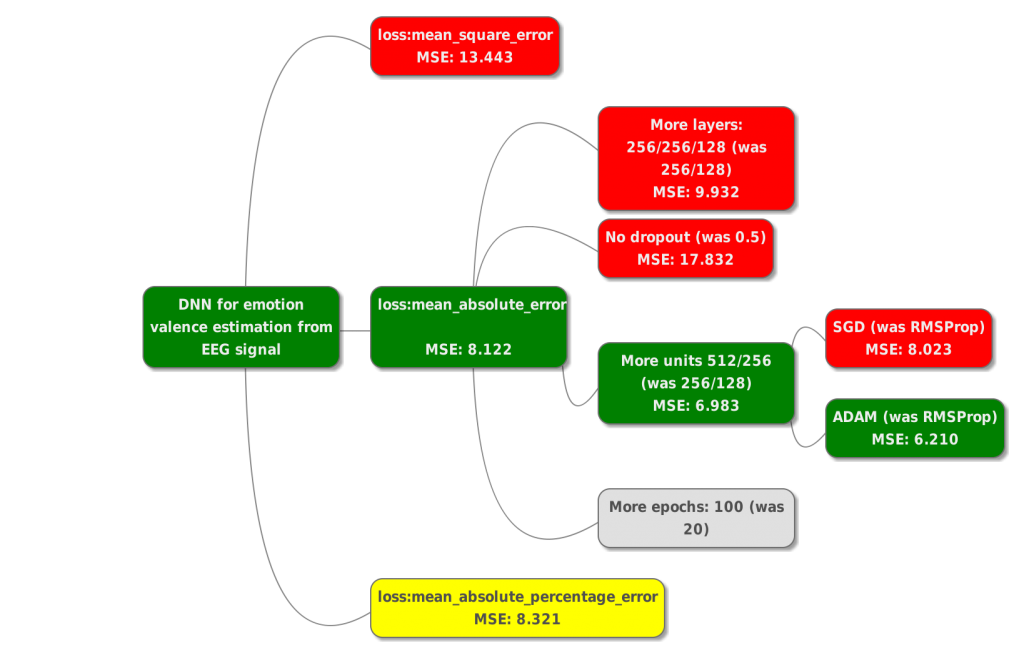

Using a MindMap in Informal DNN Parameter Optimization

So you’ve decided to throw a Deep Neural Network at your data. Great! You try a basic setup and it kinda works, but you are curious what will happen if you add, say, a third layer…

“Did it get better? Not sure… let’s add another one. Oh, now it is worse. What if I keep three layers, but increase their size?… Somewhat better, good! Now I can try another optimizer… wait, I probably have to write down what I’ve tried so far.”

If you’ve trained deep neural networks, the situation above will feel familiar. So you create a spreadsheet to keep track of what you’ve tried and the results you got. All is well until you decide to try yet another parameter, and maybe even different preprocessing. Now you have to redesign your spreadsheet, probably adding tabs for the different preprocessing methods… and the spreadsheet becomes quite unusable.

A mindmap ended my struggles:

- No need to decide on the number of parameters in advance and no need to foresee the exploration branching.

- Color-code the leaves to mark promising configurations (green), dead ends (red), unclear cases (yellow) and ideas to try (gray).

- You can easily see which promising configuration branches were left unexplored and pick up from there.

- No problem creating new branching points at any leaf.
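If you ever want the same structure in code, the list above can be sketched as a small tree. This is only an illustration, not how the mindmap tool works: the names `Status`, `Node`, `branch` and `open_leads` are made up for this example, and the configurations are placeholders in the spirit of the story above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterator, List, Tuple


class Status(Enum):
    """Leaf colors from the list above (hypothetical names)."""
    PROMISING = "green"
    DEAD_END = "red"
    UNCLEAR = "yellow"
    TO_TRY = "gray"


@dataclass
class Node:
    label: str
    status: Status = Status.TO_TRY
    children: List["Node"] = field(default_factory=list)

    def branch(self, label: str, status: Status = Status.TO_TRY) -> "Node":
        """Add a new branching point under this node and return it."""
        child = Node(label, status)
        self.children.append(child)
        return child

    def leaves(self, path: Tuple[str, ...] = ()) -> Iterator[Tuple[Tuple[str, ...], "Node"]]:
        """Yield (path from the root, node) for every leaf below this node."""
        here = path + (self.label,)
        if not self.children:
            yield here, self
        else:
            for child in self.children:
                yield from child.leaves(here)


def open_leads(root: Node) -> List[str]:
    """Leaves worth picking up next: promising ones and untried ideas."""
    wanted = (Status.PROMISING, Status.TO_TRY)
    return [" > ".join(path) for path, node in root.leaves() if node.status in wanted]


# Placeholder exploration; no real results are implied.
root = Node("baseline: 2 layers", Status.UNCLEAR)
three = root.branch("3 layers", Status.UNCLEAR)
three.branch("4 layers", Status.DEAD_END)
wider = three.branch("3 wider layers", Status.PROMISING)
wider.branch("another optimizer")        # gray: idea to try
root.branch("different preprocessing")   # gray: idea to try

for lead in open_leads(root):
    print(lead)
```

Any node can grow a new branch at any time, which is exactly what the spreadsheet made painful: adding a new parameter never forces you to restructure what is already there.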

The image was created with www.mindmup.com.

mindmAp or mindmUp? 😉

mindmAp, but http://www.mindmUp.com