Notes on ICML 2016

Day 1

Tutorial: Causal Inference for Observational Studies

Causal inference was strongly represented this year. The reason was unclear, however: the methodology is the same as before, and no major breakthroughs were reported that would justify the popularity of the topic. To me, the only plausible explanation is that the problem of causality has started to matter to a larger group of people.

Intro

Does X cause Y, or does Y cause X? The Rubin-Neyman causal model is the classical framework and is still in use. There are two types of data points: the measured (present in the data) and the counterfactual. We treat the measured data as the source and the counterfactual as the unlabelled target data. ITE: individual treatment effect. ATE: average treatment effect.

Tools of the trade

- Matching: based on closest counterfactual twin (nearest neighbors).

- Train two models: a) under treatment, b) control group and measure ITE as the difference between the predictions of these two models.

- For example, if the model is linear: \(Y_t(x) = \beta^T x + \gamma t + \epsilon\), then \(\text{ITE} = Y_1(x) - Y_0(x)\) and \(\gamma\) is the measure of the effect of the treatment.

- If reality is not linear, then our effect estimate is off by an arbitrary amount.

- Joint model estimation is preferred over fitting the treatment and control models separately.

- Propensity score (works only for ATE)

- Causal graphs

- Causal forest
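To make the "train two models" item concrete, here is a minimal sketch in Python with NumPy. Everything in it is an assumption for illustration: the helper names (`fit_linear`, `two_model_ite`), the synthetic data, and the choice of plain least squares as the outcome model. Treatment is assigned at random here, which sidesteps the confounding that makes real observational studies hard.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares fit with an intercept; returns the coefficient vector."""
    X1 = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef

def predict_linear(coef, X):
    """Predictions of a model fitted by fit_linear."""
    X1 = np.column_stack([X, np.ones(len(X))])
    return X1 @ coef

def two_model_ite(X, t, y):
    """Fit one outcome model per arm; ITE estimate = difference of predictions."""
    coef_treated = fit_linear(X[t == 1], y[t == 1])
    coef_control = fit_linear(X[t == 0], y[t == 0])
    return predict_linear(coef_treated, X) - predict_linear(coef_control, X)

# Synthetic data (hypothetical) following Y_t(x) = beta^T x + gamma * t + eps.
rng = np.random.default_rng(0)
n, d = 2000, 3
beta, gamma = np.array([1.0, -0.5, 0.3]), 2.0
X = rng.normal(size=(n, d))
t = rng.integers(0, 2, size=n)          # randomized treatment: no confounding
y = X @ beta + gamma * t + 0.1 * rng.normal(size=n)

ite = two_model_ite(X, t, y)            # one estimate per unit
ate = ite.mean()                        # averaging the ITEs gives an ATE estimate
print(f"estimated ATE: {ate:.2f} (true gamma: {gamma})")
```

Because the data really is linear, the estimate lands close to \(\gamma\); with a non-linear ground truth the same code would run without complaint and return a wrong answer.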

Tutorial: Memory Networks for Language Understanding (by Jason Weston)

Intelligent Conversational Agents

The ultimate goal is to build an intelligent conversational agent. Supposedly, an end-to-end ML system is the way to achieve that. The most promising method at the moment is a memory network:

- Large memory to read/write and a component that learns when to do that

- Reasoning with Attention over Memory (RAM)

The bAbI tasks are just a first step; eventually a good model has to work on real data as well. An LSTM with attention can remember for a somewhat longer time. The talk closely followed the MemNN paper and felt somewhat outdated.

Tutorial: Rigorous Data Dredging: Theory and Tools for Adaptive Data Analysis

Differential privacy framework for holdout

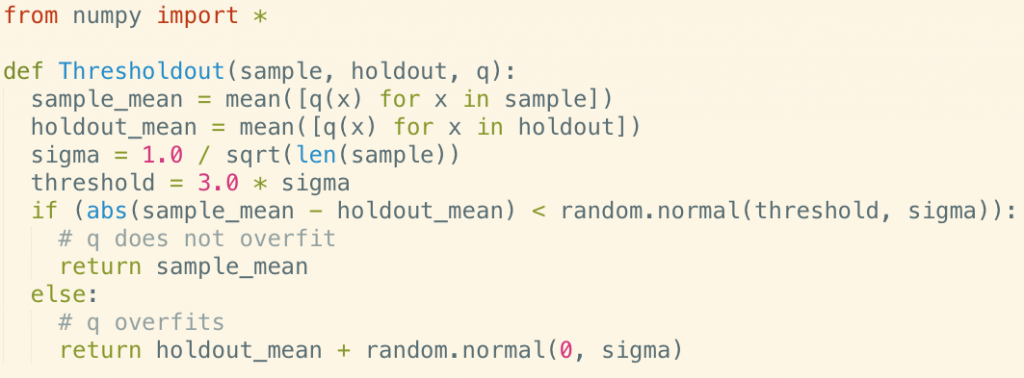

Makes more effective use of the data by allowing the holdout set to be reused, and provides statistical guarantees that this can be done without overfitting. Take the usual case where you need to evaluate a model on the holdout set. The idea is to have a wrapper function that decides whether we really need to evaluate the model on the holdout set, or whether we can do that on the training set and still get a truthful estimate. The wrapper function \(\text{Thresholdout}()\) runs the model \(q()\) on the training set and on the holdout set to estimate, say, accuracy, but it does not report the result right away. If the statistics of \(q(X_\text{training})\) match the statistics of \(q(X_\text{holdout})\) up to some \(\epsilon\), the method reports the accuracy estimated on the training set. Only when the statistics differ does it use the holdout set, with some noise added to the estimate. See the code snippet. [Slides]
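The wrapper logic described above can be sketched roughly as follows. This is a simplified illustration, not the algorithm from the tutorial verbatim: the function name, the default `threshold` and `sigma` values and the use of a single Laplace noise scale are my assumptions, and the full method has more moving parts (a query budget, for instance) that are left out.

```python
import numpy as np

def thresholdout(train_vals, holdout_vals, threshold=0.04, sigma=0.01, rng=None):
    """Answer one query given its per-example values on both sets.

    train_vals / holdout_vals: per-example values of the statistic, e.g. for
    accuracy 1.0 where the model is correct and 0.0 where it is not.
    threshold and sigma are hypothetical defaults chosen for illustration.
    """
    rng = np.random.default_rng() if rng is None else rng
    train_mean = float(np.mean(train_vals))
    holdout_mean = float(np.mean(holdout_vals))
    # Noisy comparison: do the two estimates agree up to the threshold?
    if abs(train_mean - holdout_mean) > threshold + rng.laplace(scale=sigma):
        # They disagree: answer from the holdout set, with noise added.
        return holdout_mean + rng.laplace(scale=sigma)
    # They agree: report the training estimate, the holdout stays hidden.
    return train_mean

# Toy usage with sigma=0 so the two branches are easy to see.
same = thresholdout(np.array([1.0, 1.0, 0.0, 1.0]),
                    np.array([1.0, 0.0, 1.0, 1.0]), sigma=0.0)
overfit = thresholdout(np.ones(4),
                       np.array([1.0, 0.0, 0.0, 1.0]), sigma=0.0)
print(same, overfit)
```

In the first call both sets give the same accuracy, so the training estimate is returned; in the second the model looks perfect on the training set only, so the answer comes from the holdout set.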

Tutorial: Recent Advances in Non-Convex Optimization and its Implications to Learning (by Anima Anandkumar)

Escaping saddle points

Saddle points are the major problem in the optimization of neural networks (and of other ML methods). There are lots of saddle points: take two optimal solutions, which are critical points (gradient = 0), and combine them; you get another critical point, but it is not necessarily optimal: it is a saddle point. Neural nets are very prone to this problem because there are as many optimal solutions as there are permutations of neurons. How do we solve that problem?

- SGD helps escape saddle points automatically due to the randomness.

- Newton’s method converges to a saddle point if used naively (ignoring the sign). Solution: use plain GD until the gradient is small (use heuristics to decide what that means) and then apply Newton’s method, but follow only the directions with negative eigenvalues. If the Hessian has no negative eigenvalues, you are at a local minimum (and not at a saddle point).

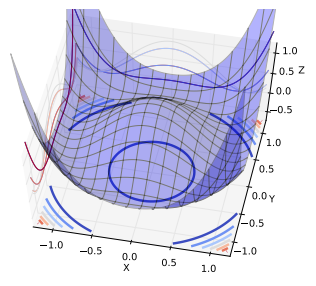

- However, there are saddle points where no Hessian eigenvalue is negative. The “wine bottle” surface is an example. To handle such a case you need to go to the 3rd order to determine whether a point with a degenerate Hessian is a local optimum or a saddle point (they have a paper on how to do that). Finding a fourth-order local optimum is NP-hard, and not even an approximate solution is known. The general question of how to escape higher-order saddle points quickly is an open research question.
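The second-order check from the list above can be illustrated with a few lines of NumPy. This is a sketch under my own assumptions (the function name, the labels it returns and the tolerance are all hypothetical), and it only covers the Hessian test, not the gradient descent phase or the third-order analysis.

```python
import numpy as np

def classify_critical_point(hessian, tol=1e-8):
    """Classify a critical point (gradient = 0) from its Hessian eigenvalues."""
    eigvals, eigvecs = np.linalg.eigh(hessian)   # eigenvalues in ascending order
    if eigvals[0] < -tol:
        # Negative curvature: moving along this eigenvector decreases the
        # function, so it can serve as an escape direction.
        return "negative curvature", eigvecs[:, 0]
    if eigvals[0] > tol:
        return "local minimum", None
    # Smallest eigenvalue is (numerically) zero: the second-order test is
    # inconclusive and higher-order information would be needed.
    return "degenerate", None

# f(x, y) = x^2 - y^2 has a saddle at the origin with Hessian diag(2, -2).
kind, direction = classify_critical_point(np.diag([2.0, -2.0]))
print(kind, direction)
```

For the toy saddle the escape direction is the y axis, which is exactly where the function curves downwards.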

Escaping local optima

After you’ve dealt with saddle points you are faced with the problem of local optima. Spectral optimization: matrix eigenanalysis provides solutions where the eigenvectors are the critical points. We can extend that method to tensors, but it becomes a lot harder: there are exponentially more eigenvectors, \(e^d\), and thus exponentially more saddle points. You can use tensor decomposition instead of maximum likelihood for unsupervised learning tasks, which supposedly has a better error surface. Perturbation analysis asks how adding noise to tensors affects the computation of eigenvalues. This has been explored for 2D matrices, but not so much for higher-order tensors. Applications include 3D Latent Dirichlet Allocation, which looks at triples of words.

Conclusion: using better objective functions (like tensor decomposition here) is one possible way to avoid local optima. There are practical examples where this alternative objective function leads to a better likelihood even though the likelihood was not the function being optimized: github.com/FurongHuang/SpectralLDA-TensorSpark, and tensor decomposition applied to a sentence embedding task (same as word embeddings, but on sentences). It is also possible to learn representations using spectral methods as an alternative to NN-learned representations. Applications to supervised learning: training a neural network with tensors (see Beating the Perils of Non-Convexity: Guaranteed Training of Neural Networks using Tensor Methods).

[Slides]

www.offconvex.org

facebook.com/nonconvex

Day 2

Keynote: Causal Inference for Policy Evaluation (by Susan Athey)

A very soft presentation about the use of causal inference. One interesting experiment about user preferences when clicking on the Google search results: the top link is clicked 25% of the time, while the 3rd link is clicked 7%. But if you move the first (the actual best) link to the 3rd position, it is clicked 12%.

Session: Neural Networks & Deep Learning

One-Shot Generalization in Deep Generative Models (Google DeepMind)

DRAW.

Learning to Generate with Memory

Variational auto-encoders with memory. Improvements on MNIST generation: the number of meaningless characters generated is smaller.

A Theory of Generative ConvNet

Generative model derived from generative ConvNet. Class-specific weight matrices. Pretty convincing generated texture images.

Texture Networks: Feed-forward Synthesis of Textures and Stylized Images

Yet another texture generator. This one works two orders of magnitude (~100x-500x) faster. A similar approach can be applied to image stylization. Allows for real-time (8 frames/sec) stylization of a video stream from a webcam.

Discrete Deep Feature Extraction: A Theory and New Architectures

Lipschitz continuity. Feature importance evaluation.

Deep Structured Energy Based Models for Anomaly Detection

Instead of training RBMs to minimize the energy, they train a DNN with the energy as the output. Applicable to sequential data: Recurrent EBM. Convolutional EBM.

Noisy Activation Functions

Just an interesting concept.

Day 3

Keynote: A Quest for Visual Intelligence (by Fei-Fei Li)

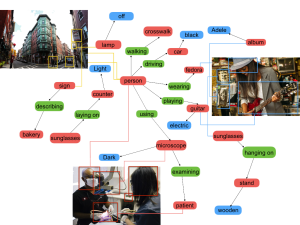

Historical roadmap of computer vision. The Cooper kitten experiment shows that vision is learned depending on the environment; therefore, an artificial vision system should be learned, not crafted. Human vision is very contextual: the same unrecognizable blob can be categorized by a human once placed into a context. LSTM for caption generation. See the dense captioning paper for the current state of caption generation. There is a new dataset [!]: www.visualgenome.org to investigate image and language models. Object localization and categorization in real time on a video stream.

Test of Time Award: Dynamic Topic Modeling

Based on LDA, but adds time (topic drift) into the picture. You can see how topic-specific words change over time.

Session: Neural Networks and Deep Learning

Strongly-Typed Recurrent Neural Networks

At each new time step an RNN works in a slightly new basis, but in practice we ignore that and manipulate the vectors (which actually are in different spaces) as usual. Here they change the LSTM a bit so that this violation of common sense does not happen. Achieved via minor changes in the LSTM gate equations. No win performance-wise. A useful article to read: An Empirical Exploration of RNN Architectures evaluates 10,000 architectures.

A Convolutional Attention Network for Extreme Summarization of Source Code

The idea: take the source code of a method and predict how that method should be named. When programmers name their methods they put semantic meaning into the names. Training a model on such data could extract a summarized description of what a code snippet does. No examples were demonstrated, so it probably does not work too well.

Ask Me Anything: Dynamic Memory Networks for Natural Language Processing (Richard Socher)

The most accurate model on the bAbI dataset at the moment: 93.6% average accuracy. Marginally outperforms a bunch of other methods on sentiment analysis tasks. Same for POS tagging.

Meta-Learning with Memory-Augmented Neural Networks

Learning to learn. Based on NTM. Memory is used to store the inputs that appeared before, and if the same input is presented again in an input sequence the correct class label can be read out from the memory (isn’t that overfitting?).

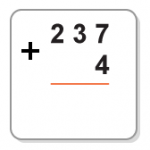

Learning Simple Algorithms from Examples (Zaremba, OpenAI; Mikolov)

The task of addition: the input is given in a grid, where the numbers are positioned as in column addition (see the picture). The algorithm decides which part of the input it needs to access at the next time step, and also whether it is ready to output the answer or needs to read more input. Solved via reinforcement learning. Learns a bunch of algorithms with 90-100% accuracy: copy, reverse, single digit multiplication, 2 and 3 row addition. Trained on sequences of up to 100 characters using curriculum learning. Does not generalize infinitely (length-wise). Read also [!] Neural Programmer-Interpreters.

CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy

We would like to hide our data from a service provider: homomorphic encryption. SEAL library. Proof-of-concept on MNIST: encrypt inputs and labels and then train on the encrypted data, so the server never learns any of the data. Latency and memory footprint problems: 15x memory and about one minute to classify one instance. 99% accuracy.

Day 4

Session: Neural Networks and Deep Learning

Generative Adversarial Text to Image Synthesis

Generate images from text. Looks reasonable and even quite cool on the COCO dataset.

Autoencoding beyond pixels using a learned similarity metric

The idea: use convnet features as the basis for a similarity measure. Combines a variational autoencoder (VAE) with a generative adversarial network (GAN). Role of the VAE: x -> Encode -> z -> Decode -> x’. Role of the GAN: x’ vs. x -> discriminator. Feature-wise reconstructions on the celebrity faces dataset look somewhat better than the usual pixel-wise ones. One other idea is how to quantitatively test the goodness of reconstruction by an autoencoder: take an additional network and train it on the reconstructed images. The presented reconstruction method leads to better performance of that additional network. Isn’t that because the features VAEGAN extracts are the same ones the additional network uses to classify? Preserving them trivially leads to better results on that metric.

Exploiting Cyclic Symmetry in Convolutional Neural Networks

If we have rotation-invariant data then introducing that prior could make the NN’s job easier. Only 90 deg cases are considered in this work 🙁

A Deep Learning Approach to Unsupervised Ensemble Learning

Stacking RBMs increases the conditional independence between the inputs as layers go deeper. They propose an algorithm to decide how many RBMs should be stacked to reach conditional independence.

From Softmax to Sparsemax: A Sparse Model of Attention and Multi-Label Classification

Enforces sparsity of the output (label) layer. Marginal improvements on real datasets, but for attention mechanisms it provides a narrower set of attended objects, which is more compact and interpretable.
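To show what "sparse" means here, below is a small NumPy sketch of the sparsemax transformation next to softmax. It follows the sort-and-threshold recipe for projecting a score vector onto the probability simplex; the function and variable names are mine, and it handles a single 1-D vector only.

```python
import numpy as np

def sparsemax(z):
    """Euclidean projection of a score vector z onto the probability simplex."""
    z = np.asarray(z, dtype=float)
    z_sorted = np.sort(z)[::-1]                # scores in descending order
    cumsum = np.cumsum(z_sorted)
    k = np.arange(1, len(z) + 1)
    support = 1 + k * z_sorted > cumsum        # sorted entries that stay non-zero
    k_max = k[support][-1]                     # size of the support
    tau = (cumsum[k_max - 1] - 1) / k_max      # threshold subtracted from scores
    return np.maximum(z - tau, 0.0)

def softmax(z):
    """Standard softmax, for comparison."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - np.max(z))
    return e / e.sum()

scores = np.array([2.0, 1.0, 0.1])
sparse_probs = sparsemax(scores)
dense_probs = softmax(scores)
print("sparsemax:", sparse_probs)
print("softmax:  ", dense_probs)
```

Softmax always assigns some probability to every entry, while sparsemax can push low-scoring entries to exactly zero, which is what makes the attended set narrower.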

Scalable Gradient-Based Tuning of Continuous Regularization Hyperparameters

How to find good hyperparameters faster? An idea: adjust the hyperparameters during training. Computes the derivative of the cost with respect to the hyperparameters. Works only for numerical parameters like weight decay and additive noise; not applicable to the number of nodes, the architecture, etc.

Keynote: Mining Large Graphs: Patterns, Anomalies, and Fraud Detection (by Christos Faloutsos)

A motivational talk about large graphs and the kind of knowledge that can be extracted from them.

Day 5: Workshops

Workshop: Deep Learning Workshop

The workshop was planned in an unusual format: the organizers posed a question and the invited speakers prepared 20-minute talks. However, it seemed that the speakers still used the opportunity to talk mostly about their own work.

Q1: What is deep learning in the small data regime?

Harri Valpola

Unsupervised learning needs to learn the tricks of the brain:

Bioinspired AI research: http://www.thecuriousaicompany.com/research

Anima Anandkumar

Using non-linear moments (see the notes on the tutorial on Day 1) to train neural networks. Provides some performance guarantees. On some tasks it is demonstrated to converge on a good solution using a smaller number of samples.

Joelle Pineau

Dialog systems, healthcare. Four tips on applying deep learning in the small data regime:

Brenden Lake

How to develop an algorithm with human-like learning capacities. Omniglot dataset. Bayesian Program Learning to generate new characters. Their method produces Omniglot characters that are indistinguishable (by a human rater) from characters drawn by humans. Good. Now, how do we scale all that to human-like performance or tasks, and what is the next step after Omniglot? Yet another dataset? How do we reach a higher level of abstraction?

Leon Bottou

Small data: solve with transfer learning. But actually, for transfer learning you still need a large dataset to learn the features, so it is not exactly a “small data” regime.

Panel Discussion

Although the panel was supposed to be about small data and deep learning, it kept deviating to transfer learning, neuroscience, etc., which probably indicates that there is not much to say about the topic itself.

Workshop: Deep Learning Visualization

nVidia DIGITS

The existing list of visualization tools: data preparation, visual examination, Caffe .prototxt / Torch7 visualization, learning curves, confusion matrices, classification rates, top images for a category, weight and activation visualization per layer. Tools they want to implement: visual network editor (Caffe GUI Tool, TensorBoard), visualization of how the weights and gradients change over time, visualization of each neuron’s features. There are plans to extend DIGITS to work with other data types (currently images only) and DL frameworks.

Multifaceted Feature Visualization: Uncovering the Different Types of Features Learned By Each Neuron in Deep Neural Networks

Multifaceted neuron: a neuron that has multiple receptive fields. It is interesting to visualize all meaningful facets of an artificial neuron. That’s what they do: generate images that turn some particular neuron on. Use backpropagation to obtain an image (starting from a noise image). The problem is that such images are often non-informative. To fix that they change the objective to maximize the activation and minimize the local variance of the image at the same time. This gives better results, although all facets tend to be the same, which can also be fixed. The final algorithm is:

The same idea can be applied to hidden neurons as well, to get a more extensive understanding of the features the hidden neurons represent. [!] With higher layers the variation between facets increases. Relation to neuroscience? Do higher layer neurons have wider receptive fields?

Human Attention in Visual Question Answering: Do Humans and Deep Networks Look at the Same Regions?

[!] Both an artificial question answering system and a human are presented with the same image-question pairs. They explore the attention of the artificial system and how it compares to the attention of a human. www.cloudcv.org/vqa

A New Method to Visualize Deep Neural Networks

Explaining individual classifier decisions via visualizations. [!] How much each pixel contributes to the classification. Drop each of the features and look at how the performance changes; that method assumes that the features are not correlated. They propose a few improvements: conditional sampling (on surrounding units), removing patches of pixels instead of a single pixel, better visualization.
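The "drop a patch and see how the score changes" idea can be sketched in a few lines. This is a deliberately naive version under my own assumptions: hidden patches are filled with a constant rather than sampled conditionally on their surroundings as the paper proposes, and `toy_score` is a hypothetical stand-in for a real classifier's class score.

```python
import numpy as np

def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Relevance of each patch = drop in the class score when it is hidden."""
    base = score_fn(image)
    h, w = image.shape
    relevance = np.zeros((h, w))
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = fill   # hide one patch
            relevance[i:i + patch, j:j + patch] = base - score_fn(occluded)
    return relevance

def toy_score(img):
    """Hypothetical classifier score that only looks at the top-left corner."""
    return float(img[:2, :2].sum())

image = np.ones((4, 4))
relevance = occlusion_map(image, toy_score)
print(relevance)
```

Only the top-left patch gets a non-zero relevance, because it is the only region the toy score depends on; with a real network `score_fn` would return the probability (or logit) of the class being explained.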

Day 6: Workshops

Workshop: Data-Efficient Machine Learning

Data Efficient Learning for EEG Signals using Spectral, Temporal and Spatial Information (Joelle Pineau)

Epilepsy. Neurostimulation. In vitro. Reinforcement learning approach. Now in vivo with EEG: turn the signal into an “image” and hit it with convolutional nets combined with LSTM. CHB-MIT dataset. Their group also works on sleep stage classification.

Dropout in RNNs

It is possible and can be beneficial.

Toward Routine Use of Informative Priors (Andrew Gelman)

Weakly informative priors. Provided an example of estimating the parameters of two data distributions which are 2x apart: without a prior it does not work, but just adding an N(0, 1) prior makes it much better. What about models with a huge number of parameters, what prior should be used there?

Structuring Receptive Fields In Convolutional Networks

Convnets are good at classification and segmentation; they are OK when we need stability (there are ways to intentionally or unintentionally perturb them); they are bad when dealing with limited data. Wavelet Scattering Networks are very data efficient. Lipschitz continuous representation. They combine CNNs with Scattering Networks.

Conclusions

Compared to the previous year the conference felt disoriented. Last year (ICML’15 in Lille) the fascination with deep learning was almost palpable: novel ideas, novel applications, visionary panels and discussions all gave the feeling of “look, this deep learning stuff is really something!”. Other trends, like Bayesian optimization, Gaussian processes and a few more, were also strong and inspiring. But that was last year. This time half of the talks seemed to go along the “we all know that deep learning is cool, let’s apply it to the problem X… just because why not…” line. Is it because all the fresh stuff was presented at ICLR?…

Of course that does not apply to every talk — as you’ve seen there were remarkable and interesting presentations as well. The overall trending topics seemed to be:

- One-shot learning

- Memory networks

- Causal inference

- Attention mechanisms

- Learning to learn

- Spark
