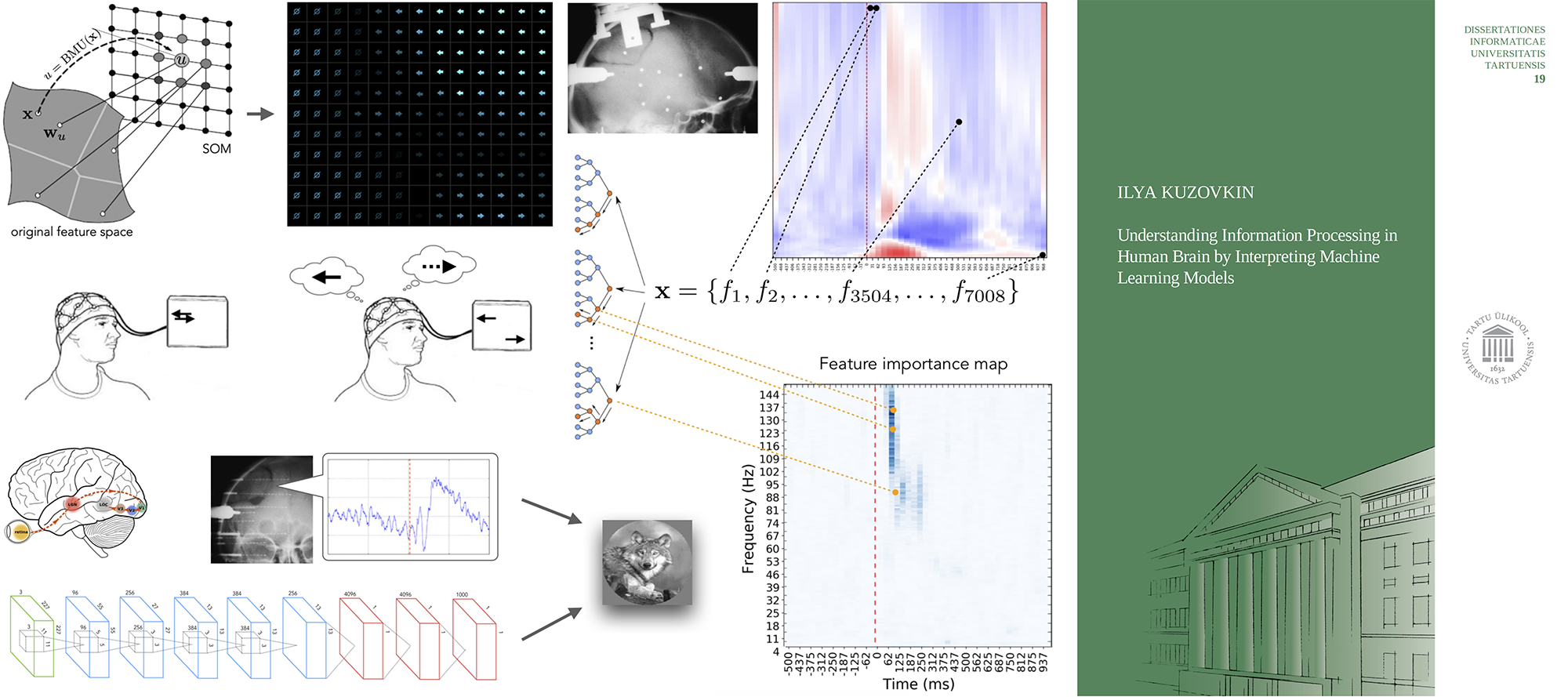

My PhD Thesis “Understanding Information Processing in Human Brain by Interpreting Machine Learning Models”

It has been a very long time since humans began to use their reasoning machinery, the brain, to reason, among other things, about that same reasoning machinery itself. Some claim that such self-referential understanding is impossible to attain in full, but others are still trying, and they call it neuroscience. The approach we take is the same one we use to understand almost any other phenomenon: observe, collect data, infer knowledge from the data, and formalize that knowledge into elegant descriptions of reality. In neuroscience we have come to call this last component modeling. Over the years, neuroscientists have used this approach to address and explain many aspects of the brain. Some aspects remain unexplained, and others have not yet been addressed at all.

The era of digital computing allowed us to observe and collect data at an ever-growing rate. The amount of data created the need, and the increase in computational power provided the means, to develop automatic ways of inferring knowledge from data, and the field of machine learning was born. In essence, it is the same process of knowledge discovery that we have long been using: a phenomenon is observed, data is collected, knowledge is inferred, and a formal model of that knowledge is created. The main difference is that a large portion of this process is now done automatically.

Neuroscience is traditionally a hypothesis-driven discipline: a hypothesis has to be put forward first, before collecting and analyzing the data that will support or refute it. Given the amount of work required to complete a study, there are solid reasons for setting up the process this way. However, automatic knowledge discovery has reduced the time the process takes, and the balance between the hypothesis-driven approach and the exploratory, data-driven one is starting to shift. In this work we argue that machine learning algorithms can act as automatic builders of insightful computational models of neurological processes. These methods can build models that rely on much larger arrays of data and explore much more complex relationships than a human modeler could. The tools that exist to estimate a model's generalization ability serve as a test of the model's elegance and of its applicability to the general case. Human effort can thus be shifted from manually inferring knowledge from data to interpreting the automatically produced models and articulating their mechanisms as intuitive explanations of reality.
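One widely used tool for estimating a model's generalization ability is k-fold cross-validation: the model is trained on part of the data and scored on trials it has never seen. The sketch below illustrates the idea on synthetic data. It assumes a simple nearest-centroid decoder, chosen only for brevity and not the models used in the thesis, and the function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers: a nearest-centroid decoder is a stand-in model for illustration.

def nearest_centroid_fit(X, y):
    """Return the class labels and the mean feature vector (centroid) of each class."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids

def nearest_centroid_predict(model, X):
    """Assign each sample to the class whose centroid is closest (Euclidean distance)."""
    classes, centroids = model
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]

def k_fold_accuracy(X, y, k=5, seed=0):
    """Estimate generalization accuracy: train on k-1 folds, test on the held-out fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = []
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        model = nearest_centroid_fit(X[train], y[train])
        scores.append(np.mean(nearest_centroid_predict(model, X[test]) == y[test]))
    return float(np.mean(scores))

if __name__ == "__main__":
    rng = np.random.default_rng(42)
    # Synthetic data: 200 "trials" x 10 "features", two classes with shifted means.
    y = np.repeat([0, 1], 100)
    X = rng.normal(size=(200, 10)) + y[:, None] * 1.0
    print("cross-validated accuracy:", k_fold_accuracy(X, y))
    # With shuffled labels there is nothing to learn, so accuracy should hover near chance.
    print("shuffled-label accuracy:", k_fold_accuracy(X, rng.permutation(y)))
```

Comparing the real score against the shuffled-label score is one simple way to check that a model has learned something that generalizes rather than memorized its training trials.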

A video recording of the defense procedure at the Institute of Computer Science of the University of Tartu

In Chapter 1 of the thesis we explore the history of the symbiosis between neuroscience and machine learning, showing that these areas of scientific discovery have a lot in common and that discoveries in one often lead to progress in the other. Chapter 2 explores more formally what it would take to create an intuitive description of a neurological process from a machine-learned model. We discuss a subfield of interpretable machine learning that provides tools for in-depth analysis of machine learning models. When applied to models trained on neural data, these tools turn the models into a source of insights about the inner workings of the brain. We then propose a taxonomy of machine learning algorithms based on the internal knowledge representation a model relies on to make its predictions.

In chapters 3, 4 and 5 we present examples of scientific studies we conducted to gain knowledge about the human brain by interpreting machine learning models trained on neurological data. The studies demonstrate the applicability of this approach at three different levels of neural organization. Chapter 3 shows how the analysis of a decoder trained on human intracerebral recordings leads to a better understanding of category-specific patterns of activity in the human visual cortex. Chapter 4 compares the structure of the human visual system with that of an artificial vision system by quantifying the similarities between the knowledge representations the two systems use. The final chapter takes a step to an even higher level of abstraction and employs a topology-preserving dimensionality reduction technique, in conjunction with real-time visualization, to explore the relative distances between a human subject's mental states.
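One common way to quantify how similar two systems' representations are is representational similarity analysis (RSA): for each system, build a matrix of dissimilarities between its responses to every pair of stimuli, then correlate the two matrices. The sketch below assumes RSA with 1 minus Pearson correlation as the dissimilarity and a Spearman rank correlation between matrices; it is an illustration of the general technique, not necessarily the exact procedure used in the thesis. The function names and the synthetic "brain" and "layer" data are hypothetical.

```python
import numpy as np

def rdm(responses):
    """Representational dissimilarity matrix: 1 - Pearson correlation between
    the response patterns (rows) evoked by each pair of stimuli."""
    return 1.0 - np.corrcoef(responses)

def rank(v):
    """Ranks of a 1-D array (assumes no ties, as with continuous-valued data)."""
    r = np.empty(len(v))
    r[np.argsort(v)] = np.arange(len(v))
    return r

def rsa_score(responses_a, responses_b):
    """Spearman correlation between the upper triangles of two RDMs.
    Inputs are (n_stimuli, n_features); the feature counts may differ."""
    iu = np.triu_indices(responses_a.shape[0], k=1)
    a, b = rdm(responses_a)[iu], rdm(responses_b)[iu]
    return float(np.corrcoef(rank(a), rank(b))[0, 1])

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    latent = rng.normal(size=(20, 8))  # hypothetical stimulus structure shared by both systems
    brain = latent @ rng.normal(size=(8, 30)) + 0.5 * rng.normal(size=(20, 30))     # e.g. 30 electrodes
    layer = latent @ rng.normal(size=(8, 100)) + 0.5 * rng.normal(size=(20, 100))   # e.g. 100 units
    unrelated = rng.normal(size=(20, 100))
    print("brain vs. related layer:  ", rsa_score(brain, layer))
    print("brain vs. unrelated layer:", rsa_score(brain, unrelated))
```

Because RSA compares dissimilarity structure rather than raw activity, it can relate systems whose responses live in different spaces, such as electrode recordings and network units.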

With this work we aim to demonstrate that machine learning provides a set of readily available tools for automatic knowledge discovery in neuroscience, to take a step forward in the way we create computational models, and to highlight the importance and unique areas of applicability of the exploratory, data-driven approach to neuroscientific inquiry.

Links and materials

The text of the thesis at arXiv: https://arxiv.org/abs/2010.08715.

Manuscript at the repository of University of Tartu: https://dspace.ut.ee/handle/10062/68435.

Presentation slides: https://ilyakuzovkin.com/materials/slides/Ilya-Kuzovkin-PhD-Understanding-Brain-via-Machine-Learning-Models.pdf.

Also on SlideShare: https://www.slideshare.net/iljakuzovkin/understanding-information-processing-in-human-brain-by-interpreting-machine-learning-models-phd-thesis-defense.

A video recording of the defense procedure can be watched above or at https://www.uttv.ee/naita?id=30480.
