Graz BCI Conference 2014: Notes

The main reason I was eager to attend this conference is my personal confusion about the future of BCI research. On one hand we have EEG technology, which is cheap, but does not allow you to control more than four actions reliably (my personal opinion). On the other hand we have invasive methods like ECoG and intracortical electrodes, which allow you to do so much more, but you need surgery to use them. For a researcher with a vague intention to use BCI in practice the dilemma is obvious: either widely applicable but weak EEG or powerful but invasive techniques. The conference in Graz has a strong bias towards EEG technology. By the end of it I hope to have enough information to decide whether my own research should focus on EEG or I should abandon it in favor of the invasive stuff.

Day 0

We arrived a day early, so we had an extra day to explore the city. Graz is very beautiful. We visited the main tourist spots and the city center, and it felt very good. There are hills in and around the city, and the “old town” is not some small area tucked away somewhere but the whole left side of the river: old churches, beautiful houses and a funny landscape. The right side, it seems, was populated later, so the architecture is simple and the prevailing mood is not that sublime.

Day 1

The university campus occupies quite a large piece of land. The buildings are just practical gray boxes made of concrete and glass. However, there are lots of them, and the rooms and buildings are well equipped, so you get quite a strong feeling that the university has money and that lots of things are happening here.

g.tec Workshop

Lots of presentations about their projects and products. Nothing really new. I got to ask about dry electrodes vs. the usual wet ones, and it seems that in a calm environment there is not much difference. However, dry electrodes react to movement much more than wet ones, so if you want to use g.Nautilus (portable thingy) while walking, you are much better off with wet ones.

Another thing I noticed is that thought-based BCI, which is the coolest thing you imagine when you think about BCIs, is not even mentioned as a viable option. All projects are based on motor imagery (a rather specific kind of brain activity, not the same as just thinking about abstract things), P300, SSVEP, etc.

During lunch I had a discussion with people from g.tec about the limitations of EEG. They agreed that EEG has reached some kind of limit, and that the idea of finding a magical algorithm that would extract significantly more information from the EEG signal looks doubtful.

Their P300 speller was demonstrated. It seems that P300-based approaches are the most popular in current BCI applications. They are reliable, ~easy to implement and work pretty fast (~25 inputs per minute).

mindBEAGLE is a tool to determine whether a patient is conscious or not (coma, vegetative state). It is, again, based on the P300 response and has three modes: sensory (vibrations on the patient’s arms), auditory and visual. Imagine a completely disabled patient who has no means of communicating with you. You put an EEG cap on his head, attach vibrating thingies to his left and right arms and start the system. The system will issue 3 types of stimuli: weak vibration on either arm, strong vibration on the left arm, strong vibration on the right arm. The strong vibrations occur much less frequently than the weak ones. If the patient is conscious he/she will start to anticipate the strong vibration, and once it finally happens the patient’s brain generates a P300 response. If you observe a significant P300 response after a strong vibration, you know that the patient is aware of the different vibrations and is concentrating on the strong ones. After that, assuming that the patient can hear you, you can set up a communication scheme: to answer yes the patient concentrates on the strong vibrations on the right arm, and to answer no on the strong vibrations on the left arm.
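To make the yes/no scheme a bit more concrete, here is a minimal sketch of the decision step. It is not how mindBEAGLE is actually implemented; the sampling rate, the 250-500 ms window, the simulated epochs and all function names are my own assumptions. The idea is only: average the EEG epochs that follow each type of rare stimulus and compare the mean amplitude in the window where a P300 would be expected.

```python
import math
import random

FS = 100  # assumed sampling rate in Hz (hypothetical)

def make_epoch(rng, attended, n_samples=80):
    """Simulate one 0.8 s post-stimulus epoch: noise, plus a bump near 300 ms if attended."""
    epoch = []
    for i in range(n_samples):
        t = i / FS
        bump = 5.0 * math.exp(-((t - 0.3) ** 2) / (2 * 0.05 ** 2)) if attended else 0.0
        epoch.append(bump + rng.gauss(0.0, 1.0))
    return epoch

def average_epochs(epochs):
    """Sample-wise average over epochs; averaging suppresses noise unrelated to the stimulus."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]

def window_mean(signal, t_start=0.25, t_end=0.5):
    """Mean amplitude in the (assumed) P300 window."""
    lo, hi = int(t_start * FS), int(t_end * FS)
    window = signal[lo:hi]
    return sum(window) / len(window)

def attended_side(left_epochs, right_epochs):
    """Pick the arm whose rare stimuli produced the larger averaged response."""
    left = window_mean(average_epochs(left_epochs))
    right = window_mean(average_epochs(right_epochs))
    return "right" if right > left else "left"

# Simulated patient who concentrates on the right arm, i.e. answers "yes".
rng = random.Random(0)
left = [make_epoch(rng, attended=False) for _ in range(30)]
right = [make_epoch(rng, attended=True) for _ in range(30)]
answer = "yes" if attended_side(left, right) == "right" else "no"
```

A real system would of course need a statistical test for whether there is any P300 at all before trusting the comparison.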

A very massive P300-based project called BackHome aims at making it possible for disabled people to come back home from the hospital. It is a general framework to interface any device (like smart home, TV, etc.) with a P300 target matrix, making it possible for the patient to choose between different activities and offering a context-dependent target matrix.

OpenVibe Workshop

The following applications are all done using OpenVibe at their core.

Webapps for real time neurophysiology by Louis Mayaud.

Commercial EEG processing system built on top of the OpenVibe project: MENSIA Technologies.

BCI-based control of a JACO robotic arm with OpenViBE by Laurent Bougrain.

Quite an unclear presentation about combining OpenVibe and a robotic arm.

• JACO robotic arm (~$50,000)

• Control with motor imagery: different combinations of {left, right, foot}

• Robot Operating System

BCI could make old two-player games even more fun: a proof of concept with “Connect four” by Jérémie Mattout.

• Based on the P300 speller principle: look at the point where you want to put your token.

• Worked well with proper EEG, so they decided to try with Emotiv EPOC: you lose some speed and accuracy, but it still works.

• All done in OpenVibe, can be used with any supported neuroimaging device.

• You can add Python Box in OpenVibe to run any code you want.

Unsupervised P300 spelling by Pieter-Jan Kindermans.

• There is a standard stimulus/iteration procedure in all ERP-based BCIs

• They used an unsupervised approach, where the P300 parameters are learnt continuously during use.

• It is kind of an adaptive system.

General feeling after the workshops is that the research done in the field is scientifically very soft. Most of the challenges are technical. Maybe that is because most of the talks were given by students. Let’s see what will happen during the main conference and judge then.

Day 2

European BCI Day

BCI projects and companies will talk about who they are and what they do.

mBrainTrain

EEG Cap + Mobile phone

MindSee

Samuel Kaski, Aalto University, Finland

Search engine, which … em… somehow incorporates biophysical measurements to make search more relevant to the user.

NEBIAS

Neurocontrolled upper limb prosthesis with sensory feedback. EMG for controlling. http://www.nebias-project.eu

Wearable interfaces for hand function recovery (WAY)

More mature EMG-based prosthetic of upper limb with sensory feedback.

Also they attempt to use EEG (ocular signals) to control the exoskeleton.

The project looks cool by itself but is not very relevant to the concept of EEG-based BCI.

Day 3

Finally the conference itself. It features 35+ talks and ~100 posters and has 189 participants; the presentation acceptance rate is 84.4%.

Keynote

Towards Robust, Pervasive BCIs by Scott Makeig from University of California San Diego

What needs to happen so that EEG would work?

• People are trying to interpret what they see on EEG too much. You can demonstrate that you get a very spectacular scalp-surface signal pattern with just two sources in the brain oscillating at 9 Hz and 10 Hz. ICA makes a lot of sense in that context (Z. Akalin & S. Makeig, 2013).

• Not combining informative features (note to self: find an article about these for relevant sources)

• Not combining brain and body information: we need to incorporate knowledge about the brain into the paradigms. It makes sense to combine all the information about what is happening to the body to understand which parts of the signal are related to which activity, and to extract cognitive activity by canceling out processes related to side activities.

• We assume that the phenomena we count on are always there, but there are experiments which show that even the P300 does not appear every time (gets cancelled by the activity of other sources?). So again, we need to look at the activity of a specific source, not at the averaged activity. States of arousal and attention change from minute to minute, so assuming a fixed baseline is not the best strategy.

• User must adapt to the BCI as the BCI adapts to the subject’s activity (this is, by the way, exactly the topic of my master thesis)

• Estimate electrode locations from data between sessions.
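The first point in the list above (two steady sources giving a dramatic-looking scalp signal) is easy to reproduce with a toy simulation. This is only my own illustration, not something shown in the talk: the sampling rate, the mixing weights and the function names are assumptions. A single channel that sums a 9 Hz and a 10 Hz source waxes and wanes once per second, although neither source changes its amplitude at all.

```python
import math

FS = 1000  # assumed sampling rate in Hz (hypothetical)

def source(freq_hz, t):
    """A steady sinusoidal source with constant unit amplitude."""
    return math.sin(2 * math.pi * freq_hz * t)

def channel(t, w9=1.0, w10=1.0):
    """One scalp channel modeled as a weighted sum of the two sources."""
    return w9 * source(9, t) + w10 * source(10, t)

def peak_amplitude(t_start, t_end):
    """Largest absolute channel value within a time window."""
    n0, n1 = round(t_start * FS), round(t_end * FS)
    return max(abs(channel(n / FS)) for n in range(n0, n1))

# Around t = 1.0 s the sources are in phase, around t = 0.5 s they cancel.
loud = peak_amplitude(0.95, 1.05)
quiet = peak_amplitude(0.45, 0.55)
```

Looking only at this channel one could be tempted to see a 1 Hz modulation of some rhythm, while the real story is two independent constant sources. That is the kind of situation where unmixing the sources first (for example with ICA) helps.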

What is the upper bound of EEG-based BCI performance?

Cristopher Koethe & Scott Makeig, 2011 is an article that extensively tries out different ML tools (not only algorithms) to explore their properties.

His guidelines for building a robust BCI:

• Build electrical forward head model

• Unmix signal sources

• Use different features for each source

• Estimate electrode positions from the data

• Build mapping between sources and channels

• Adaptive training

• Regularize BCI models using (lots of) data from other datasets

• Track BCI model evolution over time

• Include information about body activity to cancel out the parts of the signal whose origin you know and which you don’t need

• Use knowledge about the brain from neuroscience

Methods to try: OSR-LARS, Informative Features Selection,

Toolboxes in which they implement ideas mentioned above: BCILAB Toolbox [LINK], MoBI Lab [LINK]

This was the first science-oriented way of thinking presented here. Finally a motivating talk!

Talks

Analyzing EEG Source Connectivity with SCoT by Martin Billinger from Institute for Knowledge Discovery, Graz

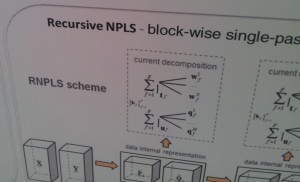

Structural connectivity, functional connectivity, effective connectivity (causal interactions). The Source Connectivity Toolbox (SCoT) (open source, on GitHub) supports a bunch of different connectivity measures. Vector autoregressive modeling. CSP instead of PCA.

Transferring Unsupervised Adaptive Classifiers Between Users of a Spatial Auditory Brain-Computer Interface by Pieter-Jan Kindermans from Ghent, Belgium

Unsupervised learning means learning in the process of using the system. Transfer learning: build a model from several users and use it for a new user. You can combine transfer learning with unsupervised learning, obtaining a system which starts from a general model and then adapts it to the current user. The method is used with the AMUSE (auditory spatial BCI) setup. The point he makes is that the combined system is better than the purely unsupervised one; however, the usual supervised approach still rules…

Motor Imagery Brain-Computer Interfaces: Random Forests vs Regularized LDA – Non-linear Beats Linear by David Steyrl

Linear vs. non-linear for BCI? A popular debate in the 2000s, resulting in the claim that a simpler model is better (BCI datasets usually have few trials and lots of features). Random Forest is the solution to all your problems! The test was made on motor imagery with 10 patients, 100 trials for training, 60 trials for testing (not CV), 6 filters per CSP, 1 sec window. Random Forest is ~2% better than LDA on the binary classification task. Summary: turns out that non-linear classifiers are not that evil 🙂

The Comparison of Cortical Neural Activity Between Spatial and Non-spatial Attention in ECoG Study by Taekyung Kim from Hanyang

Attention. Compare spatial vs. non-spatial attention in an ECoG setup. The experimental task asked subjects to pay attention to the position (spatial) or to the pattern (non-spatial) of the stimulus image. Output: they are different. In the spatial task the parietal cortex is the most active; in the non-spatial task broader areas are activated.

Airborne Ultrasonic Tactile Display Brain–computer Interface Paradigm by Katsuhiko Hamada from Tokyo

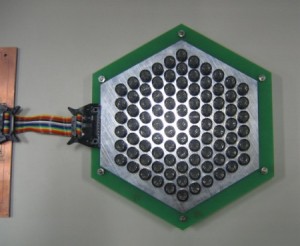

Tactile BCI using ultrasound contactless stimulation of the skin. EEG is used to classify area of tactile sensation on a hand using P300. [AUTD picture]

Classification of error-related potentials in EEG during continuous feedback by Martin Spüler

Different types of errors elicit different brain responses. Is it detectable from EEG? They tried to differentiate between two types of error: “I made an error” and “machine made an error”. The accuracy is 75%.

My idea here is to incorporate this technique into my adaptive interactive scheme somehow.

Articles to read: Milekovic 2012, 2013

Poster Session I

• Lots of P300 applications

• Some SSVEP applications

• Overoptimistic projects about reconstructing hand movements from EEG

Everything was easy, and, as before, mostly inclined toward applications and technology rather than towards theoretical science.

Day 4

Keynote

Sweet spots for BCI implants: the matter of finding and interfacing by Nick Ramsey from UMC Utrecht, Netherlands

Current use of implants is:

• Cochlear implants (impaired hearing)

• Retinal implant for impaired vision

• Neurostimulators (Parkinson, epilepsy, tinnitus)

• Psychiatry (depression, OCD)

John Donoghue is currently the state of the art.

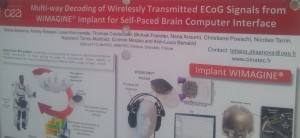

There is a funny idea to tattoo EEG electrodes on the skin. ECoG recording is used for locating the source of epilepsy so it can be removed afterwards. There is an ECoG workshop before SfN each year. ECoG recorded over a blood vessel is 2 times worse, and on the dura it is 10 times noisier. Even a low number of false positives is a problem for a real-life application. They selected not the “best” electrodes, but the ones with the lowest false positive rate.
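The electrode selection remark deserves a tiny example, because picking by false positive rate can give a different answer than picking by accuracy. The sketch below uses made-up detections and hypothetical electrode names; it only illustrates the selection criterion, not the actual procedure from the talk.

```python
def false_positive_rate(predicted, actual):
    """FP / (FP + TN): how often the detector fires when nothing happened."""
    fp = sum(1 for p, a in zip(predicted, actual) if p and not a)
    tn = sum(1 for p, a in zip(predicted, actual) if not p and not a)
    return fp / (fp + tn) if (fp + tn) else 0.0

def accuracy(predicted, actual):
    """Fraction of trials where the detection matches the ground truth."""
    return sum(1 for p, a in zip(predicted, actual) if p == a) / len(actual)

# 1 = the user intended a command, 0 = rest (made-up ground truth).
actual = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]

# Made-up per-electrode detections (electrode names are hypothetical).
detections = {
    "e1": [1, 1, 0, 1, 0, 0, 1, 0, 0, 0],  # catches every command, fires once by mistake
    "e2": [1, 0, 0, 0, 0, 0, 0, 0, 0, 0],  # misses two commands, never fires by mistake
}

most_accurate = max(detections, key=lambda e: accuracy(detections[e], actual))
fewest_false_alarms = min(detections, key=lambda e: false_positive_rate(detections[e], actual))
```

Here the most accurate electrode is e1, but the one with the fewest false alarms is e2. For a system that triggers real-world actions, an unintended activation can be worse than a missed command.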

The diversity of functions that fit inside one voxel is very high. This is why the usual 3 Tesla fMRI is not enough: in a single voxel you might have all the activity related to hands, arms, legs, knees, etc. 7 Tesla fMRI is much better, and you can already decode different fingers. Can one decode sign language? In any case a similar idea might be relevant for ECoG: a high-density ECoG grid has lots of small electrodes located close to each other. They tried it, and the level of detail was as high as in a 7T fMRI scanner. Moreover, ECoG does not have the BOLD-related lag and the signal is less blurred (whatever that means).

By stimulating electrodes on a dense grid they were able to control tongue movements (muscles): up, down, to the side. Yes, muscles in the tongue were activated in a specific way by stimulating specific areas of the brain. What about decoding? Classification of gestures (sign language) corresponding to 4 different letters gave 67% on average with an SVM (data recorded with 7T fMRI). The same experiment with ECoG gave somewhat similar results. The position of the ECoG grid matters. The same approach for classifying letters from brain activity corresponding to face muscles: ~80% for 4 letters.

It is funny that the two keynotes were exactly perfect for me: the first one was advocating EEG BCI, the second one was about ECoG BCI. Now I am not that sure that ECoG is unarguably the better direction for BCI research…

Talks

Multiple roles of ventral premotor cortex in BCI task learning and execution by Jeremiah Wander

ECoG. High gamma. Correlations between two signals. Two parameters are measured: 1) the peak of the correlation and 2) the lag. With the development of BCI control skill both parameters on specific (“control-like”) electrodes show significant changes. Can be used to explore cognitive abilities.
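For my own reference, the two parameters (peak of correlation and lag) can be computed with a plain lagged correlation. This is a generic sketch and not the authors' pipeline: the test signals are synthetic white noise rather than high-gamma power, and the function names and the maximum lag are my assumptions.

```python
import math
import random

def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)

def peak_and_lag(x, y, max_lag):
    """Return (peak correlation, lag in samples); a positive lag means y follows x."""
    best_r, best_lag = -2.0, 0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = x[:len(x) - lag], y[lag:]
        else:
            a, b = x[-lag:], y[:len(y) + lag]
        r = pearson(a, b)
        if r > best_r:
            best_r, best_lag = r, lag
    return best_r, best_lag

# Synthetic check: y is x delayed by 5 samples plus a little noise.
rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(500)]
delay = 5
y = [0.0] * delay + x[:-delay]
y = [v + rng.gauss(0.0, 0.1) for v in y]
peak, lag = peak_and_lag(x, y, max_lag=20)
```

Tracking how `peak` and `lag` change across training sessions would be the analogue of what the talk reported for the “control-like” electrodes.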

Multi-state driven decoding for brain-computer interfacing by Hohyun Cho

Accuracy variation over BCI sessions is a problem. You can fight it by using robust features, adapting the classifier (Li and Guan 2006, Li 2010, Sugiyama 2007), or rejecting bad trials. They train 36 different classifiers on different subsets of the data and then use voting for the decision. Two-class motor imagery with an accuracy of 0.83.
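The voting part is simple enough to sketch. I do not know which classifiers or features were actually used, so everything below is a stand-in: a one-dimensional made-up feature, a nearest-class-mean classifier and random subsets of trials. Only the structure (many classifiers trained on different subsets, then a majority vote) follows the talk.

```python
import random
from collections import Counter

def train_nearest_mean(trials):
    """trials: list of (feature, label). Returns a function mapping a feature to a label."""
    sums, counts = {}, {}
    for x, label in trials:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    means = {label: sums[label] / counts[label] for label in sums}
    return lambda x: min(means, key=lambda label: abs(x - means[label]))

def majority_vote(classifiers, x):
    """Let every classifier vote and return the most frequent label.

    With an even number of voters a tie is possible; Counter then returns
    the label that was encountered first.
    """
    votes = Counter(clf(x) for clf in classifiers)
    return votes.most_common(1)[0][0]

# Made-up two-class data: one feature, class means at -1 and +1.
rng = random.Random(0)
trials = [(rng.gauss(-1.0, 1.0), "left") for _ in range(100)]
trials += [(rng.gauss(1.0, 1.0), "right") for _ in range(100)]

# 36 classifiers, each trained on a different random subset of the trials.
ensemble = [train_nearest_mean(rng.sample(trials, 50)) for _ in range(36)]
decision = majority_vote(ensemble, 2.0)
```

The hope is that individual classifiers overfit to the quirks of their subset (or session), while the vote averages those quirks out.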

Independent BCI Use in Two Patients Diagnosed with Amyotrophic Lateral Sclerosis by Elisa Mira Holz

P300-based painting program. Tested with a professional painter, who put on an exhibition of his paintings made with EEG. The reported level of control is 70-90%, and the fatigue level is between low and medium.

Discriminating Between Attention and Mind Wandering During Movement Using EEG by Filip Melinscak

Using the ratio of alpha to beta rhythms. The difference between the states can be detected with 75% accuracy.
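A rough sketch of how such a ratio can be computed from a short EEG window. The band limits (alpha 8-13 Hz, beta 13-30 Hz), the sampling rate and the function names are my assumptions, and the naive DFT is used only to keep the example dependency-free. Which values of the ratio correspond to attention and which to mind wandering would have to be learned from labeled data.

```python
import cmath
import math

def band_power(signal, fs, f_lo, f_hi):
    """Sum of squared DFT magnitudes for frequencies in [f_lo, f_hi)."""
    n = len(signal)
    power = 0.0
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq < f_hi:
            coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                        for i, s in enumerate(signal))
            power += abs(coeff) ** 2
    return power

def alpha_beta_ratio(signal, fs):
    """Alpha (8-13 Hz) power divided by beta (13-30 Hz) power; band limits are assumed."""
    return band_power(signal, fs, 8.0, 13.0) / band_power(signal, fs, 13.0, 30.0)

FS = 128   # assumed sampling rate in Hz
N = 256    # 2 s window

def toy_signal(alpha_amp, beta_amp):
    """Synthetic signal: a 10 Hz (alpha) plus a 20 Hz (beta) sinusoid."""
    return [alpha_amp * math.sin(2 * math.pi * 10 * i / FS)
            + beta_amp * math.sin(2 * math.pi * 20 * i / FS) for i in range(N)]

ratio_alpha_dominant = alpha_beta_ratio(toy_signal(2.0, 1.0), FS)
ratio_beta_dominant = alpha_beta_ratio(toy_signal(1.0, 2.0), FS)
```

Since power scales with the square of the amplitude, doubling the alpha amplitude relative to beta gives a ratio of about 4.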

How Well Can We Learn With Standard BCI Training Approaches? A Pilot Study by Camille Jeunet

The traditional training scheme is proposed in [Pfurtscheller 2001]. Imagine using this paradigm to teach a child how to ride a bicycle. We need to apply psychological ideas of learning to BCI training:

• demonstrate to the test subject what the result should look like

• autonomy is important: let the test subject explore the system

• self-paced exploration boosts learning

They attempted to apply the BCI training paradigm to a non-BCI task: drawing circles or triangles on a tablet (depending on a stimulus, with feedback). The video they showed demonstrates that even for this simple task the test subject gets confused and is not able to achieve 100% accuracy. So maybe we need a better training paradigm? What would be a reasonable alternative?

Poster Session II

More machine learning oriented. I liked it much more than the first poster session and got into several discussions about ML, EEG, SSVEP and neural networks.

Day 5

Talks

Decoding of Picture Category and Presentation Duration – Preliminary Results of a Combined ECoG and MEG Study by Tim Pfeiffer

ECoG is great, but it needs surgery. Let’s look at what we can achieve with MEG. The test subject is presented with pictures from 3 categories. An SVM for 3 classes resulted in an accuracy of ~90% with ECoG data and ~70% with MEG data.

Algorithms to try: Davies-Bouldin Index for feature selection.
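A quick sketch of what using the Davies-Bouldin index for feature selection could look like, so that I do not forget the idea. This is my own simplified version (each feature is scored on its own, and the data and feature names are made up), not the procedure from the talk. The index compares within-class scatter to the distance between class centroids, so lower values mean better separated classes.

```python
def davies_bouldin(values, labels):
    """Davies-Bouldin index of one scalar feature, using class labels as clusters.

    Assumes at least two classes with distinct centroids (otherwise the
    centroid distance below would be zero).
    """
    groups = {}
    for v, lab in zip(values, labels):
        groups.setdefault(lab, []).append(v)
    centroids = {lab: sum(vs) / len(vs) for lab, vs in groups.items()}
    scatter = {lab: sum(abs(v - centroids[lab]) for v in vs) / len(vs)
               for lab, vs in groups.items()}
    total = 0.0
    for i in groups:
        # Worst-case similarity of class i to any other class.
        total += max((scatter[i] + scatter[j]) / abs(centroids[i] - centroids[j])
                     for j in groups if j != i)
    return total / len(groups)

def rank_features(features, labels):
    """Feature names sorted from best (lowest index) to worst."""
    return sorted(features, key=lambda name: davies_bouldin(features[name], labels))

# Made-up data: 3 picture categories, 3 trials each, two candidate features.
labels = [0, 0, 0, 1, 1, 1, 2, 2, 2]
features = {
    "good": [0.0, 0.1, -0.1, 5.0, 5.1, 4.9, 10.0, 10.1, 9.9],
    "bad": [0.0, 5.0, 10.0, 1.0, 4.0, 9.0, 2.0, 6.0, 8.0],
}
ranked = rank_features(features, labels)
```

One would then keep the top-ranked features and feed only those to the classifier.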

Towards Cross-Subject Workload Prediction by Carina Walter

Application to education: analyze the mental state of the student to guide the learning process. The task was to solve 240 simple math problems of increasing difficulty. The theta (3-7 Hz) and alpha (8-13 Hz) bands are the most important for workload detection. They looked at the correlation between band powers and estimated task difficulty on a cross-subject dataset.

Poster Session III

Events yet to happen

That’s it, conference is over, now a trip to Styrian vineyards will happen…

I still need to contemplate the big question of EEG vs. intracranial.

Supported by the Tiger University Program of the Information Technology Foundation for Education
